Sticker: A 0.41-62.1 TOPS/W 8Bit Neural Network Processor with Multi-Sparsity Compatible Convolution Arrays and Online Tuning Acceleration for Fully Connected Layers | Semantic Scholar

![[VLSI 2018] A 4M Synapses integrated Analog ReRAM based 66.5 TOPS/W Neural-Network Processor with Cell Current Controlled Writing and Flexible Network Architecture](https://t1.daumcdn.net/cfile/tistory/993DBA3E5BAF92CE38)

[VLSI 2018] A 4M Synapses integrated Analog ReRAM based 66.5 TOPS/W Neural-Network Processor with Cell Current Controlled Writing and Flexible Network Architecture

![[PDF] A 3.43TOPS/W 48.9pJ/pixel 50.1nJ/classification 512 analog neuron sparse coding neural network with on-chip learning and classification in 40nm CMOS | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/a2e283532b71e9b6af7addb3b3f4f4a1af6e0fb4/2-Figure1-1.png)

[PDF] A 3.43TOPS/W 48.9pJ/pixel 50.1nJ/classification 512 analog neuron sparse coding neural network with on-chip learning and classification in 40nm CMOS | Semantic Scholar

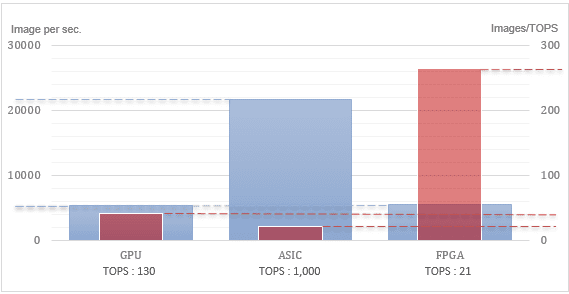

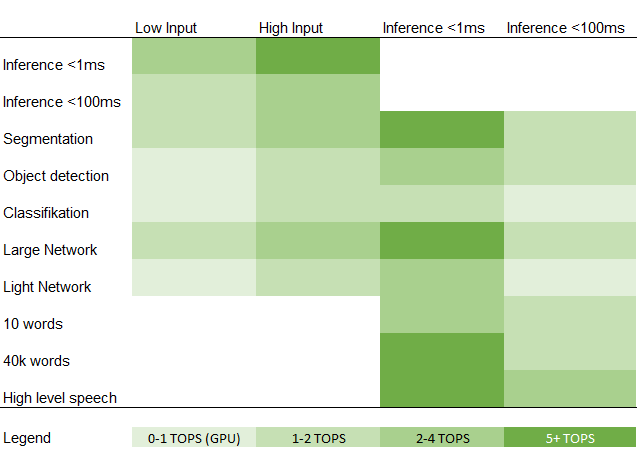

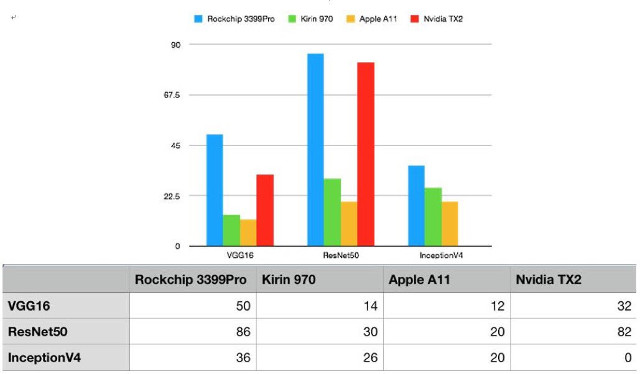

Not all TOPs are created equal. Deep Learning processor companies often… | by Forrest Iandola | Analytics Vidhya | Medium

Khadas on Twitter: "We made a mistake quoting the #VIM3 NPU performance. It is actually 5.0 TOPS! #khadas #amlogic #a311d #npu https://t.co/UEu0Iafo3E" / Twitter

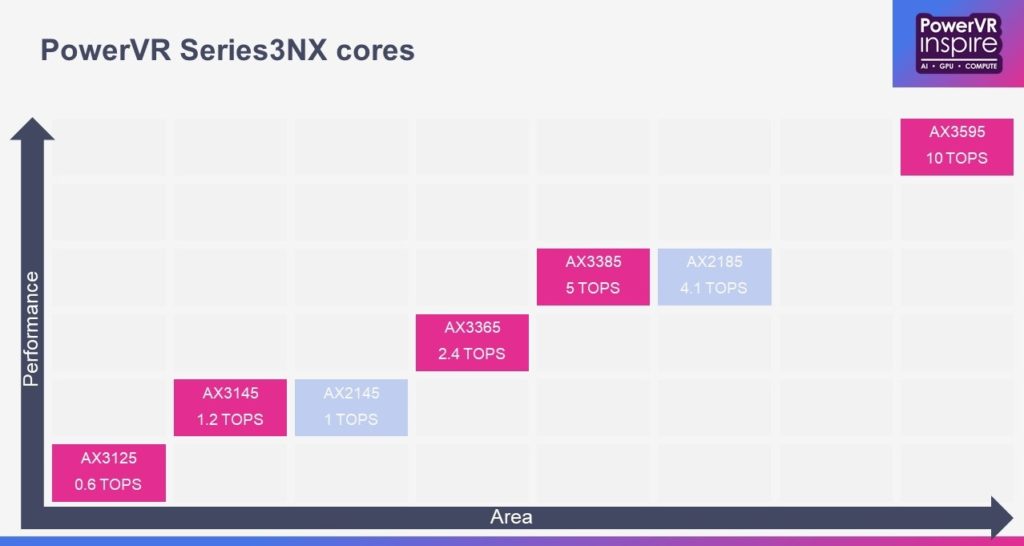

Rockchip RK3399Pro SoC Integrates a 2.4 TOPS Neural Network Processing Unit for Artificial Intelligence Applications - CNX Software

A 0.11 pJ/Op, 0.32-128 TOPS, Scalable Multi-Chip-Module-based Deep Neural Network Accelerator Designed with a High-Productivity VLSI Methodology | Research